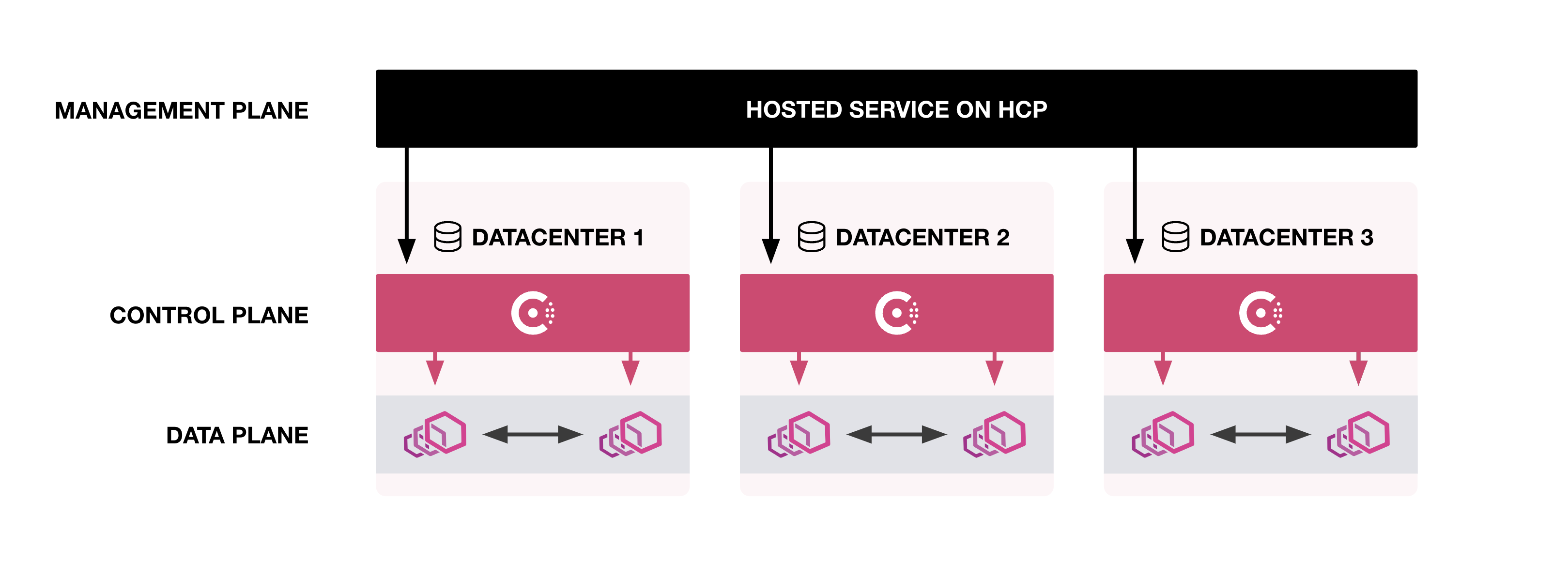

Management plane

This topic provides an overview for HCP Consul's hosted management plane service. The management plane is an interface that centralizes global management operations across all Consul clusters. It provides global visibility and control for both self-managed and HashiCorp-managed Consul clusters, even when you deploy services in multiple cloud environments and regions.

What is the management plane?

The HCP Consul management plane is a hosted service that enables you to monitor and manage multiple Consul server clusters regardless of where the clusters are hosted. It enables you to view aggregated health information for your clusters and services from a single location.

The management plane service provides the following views into your deployments:

- Consul overview

- Cluster details

- Services

- Cluster peering

You can also use the management plane to link self-managed clusters to HCP.

To learn more about the security model for the management plane service, refer to the HCP Security overview.

Consul overview

The unified overview provides an aggregated summary of both HashiCorp-managed and self-managed clusters, including their associated services. It displays overall cluster deployment information, service counts, and service instance health.

Version information for clusters also appears. When a cluster runs a version of Consul that is not the current release, a version badge appears to help you identify clusters to update. The Consul management plane may display the following badges:

- Out of date: The Consul version is not the latest release, but it is still supported because it is within two major releases.

- Out of support: The Consul version is no longer supported because two or more major releases occurred since the last update. To learn how to upgrade your cluster with HCP Consul, refer to upgrades.
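The badge rules above can be sketched as a small classifier. This is only an illustration, not how the management plane is implemented. It assumes plain X.Y.Z version strings that share the same leading number, it treats the X.Y series as a "major release", and it treats a gap of up to two series as still supported. The function names and the `supported_gap` parameter are hypothetical.

```python
from typing import Optional, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a plain 'X.Y.Z' string (optionally prefixed with 'v') into integers."""
    return tuple(int(part) for part in version.lstrip("v").split("."))


def version_badge(cluster: str, latest: str, supported_gap: int = 2) -> Optional[str]:
    """Return the badge for a cluster version, or None when it is current.

    Assumption: the support boundary is a gap of `supported_gap` release
    series, counted on the second version component.
    """
    current, newest = parse_version(cluster), parse_version(latest)
    if current >= newest:
        return None  # running the latest release, so no badge
    gap = newest[1] - current[1]
    return "Out of date" if gap <= supported_gap else "Out of support"
```

For example, with `1.15.2` as the latest release, this sketch labels `1.13.4` as out of date and `1.12.0` as out of support.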

Cluster details

You can get detailed information about your HashiCorp-managed and self-managed clusters in HCP Consul and directly access the Consul UI for each cluster from its cluster details page.

The cluster details page provides the following information about your self-managed cluster:

- Number of registered services

- Number of service instances

- Number of sidecar proxies

- Number of clients

- Cluster status

- TLS status and expiration date

- Number of active and healthy servers

- Failure tolerance

The service and service instance counts reported on this page include the consul service that is automatically deployed with every Consul server.
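The failure tolerance value listed above relates to the number of servers through Raft quorum arithmetic. The sketch below shows that general relationship. It does not describe how the management plane calculates the value it displays, and the function names are hypothetical.

```python
def quorum_size(servers: int) -> int:
    """A Raft quorum is a strict majority of the voting servers."""
    if servers < 1:
        raise ValueError("a cluster needs at least one server")
    return servers // 2 + 1


def failure_tolerance(servers: int) -> int:
    """Number of servers that can fail while a quorum remains available."""
    return servers - quorum_size(servers)


for count in (1, 3, 4, 5):
    print(f"{count} servers: quorum {quorum_size(count)}, tolerance {failure_tolerance(count)}")
```

Under this arithmetic, adding a fourth server to a three-server cluster does not raise the failure tolerance, which is why odd server counts are the usual choice.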

You can also find detailed information about individual servers in each cluster. This information includes:

- Server name

- Consul version

- Raft state

- Server ID

- LAN address

- Gossip port

- RPC port

- Time elapsed since last contact with server

In the current release, the cluster details page may not display details for every self-managed Consul server in the cluster, even though the servers are running.

To access the Consul UI for a cluster so that you can set up intentions or generate tokens, click Access Consul on the cluster details page. Refer to View the Cluster's Consul UI for additional information.

Access privileges to the Consul UI are determined by a user's access role in the HCP platform. There are three HCP roles: admin, contributor, and viewer. Users with admin or contributor roles have create, read, update, and delete (CRUD) access to Consul clusters. The viewer role is limited to view-only access to the Consul UI. HCP automatically generates tokens for UI access based on a user's HCP permission. For more information on access roles, refer to user permissions.
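As an illustration of the role model described above, the following sketch maps each HCP role to the operations it allows. The `UI_ACCESS` table and the `is_allowed` helper are hypothetical names used for this example only. HCP generates the actual tokens for you.

```python
# Hypothetical lookup table that mirrors the three HCP roles described above.
CRUD = frozenset({"create", "read", "update", "delete"})

UI_ACCESS = {
    "admin": CRUD,
    "contributor": CRUD,
    "viewer": frozenset({"read"}),  # view-only access
}


def is_allowed(role: str, operation: str) -> bool:
    """Return True when the role permits the operation; unknown roles get nothing."""
    return operation in UI_ACCESS.get(role, frozenset())


print(is_allowed("contributor", "delete"))  # True
print(is_allowed("viewer", "update"))       # False
```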

Services

The unified services overview contains information about services and the associated service instances deployed on your network. You can use it to search for a service name, which returns a list of clusters where instances of that service are deployed. In addition to listing services and grouping services that share a name, it provides the following global network information about service instances:

- Cluster IDs for the HCP Consul server the service is registered to

- Admin partition name for each service instance

- Namespace for each service instance

- The number of instances connected to Consul clusters

- The health of a service on the cluster

Service instance health is determined by the response code Consul receives when it attempts to contact the instance. Health states indicate the following response codes:

- Passing: A successful 200-299 code

- Warning: A 429 return code

- Critical: All other response codes
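The mapping above can be written as a short function. This is a minimal sketch of the rule as stated in this topic, and the function name `health_state` is hypothetical.

```python
def health_state(status_code: int) -> str:
    """Map a response code to the health state described above."""
    if 200 <= status_code <= 299:
        return "passing"
    if status_code == 429:
        return "warning"
    return "critical"  # every other response code


for code in (200, 204, 429, 404, 503):
    print(code, health_state(code))
```

Only a 429 response produces a warning in this rule. Other 4xx codes, such as 404, count as critical.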

Cluster peering

Beta feature

The management plane's cluster peering features are in a beta release. Refer to the HCP Consul cluster peering overview for HCP-specific requirements and guidance.

The cluster peering view lists active cluster peering connections between your clusters. You can use it to create new cluster peering connections, check the status of individual connections between clusters, and confirm the number of services that are available to other clusters.

For each cluster with at least one existing cluster peering connection, the following information is available:

- Name of the cluster with cluster peering connections

- Name of the admin partition with the cluster peering connection

- Number of peers connected to that cluster and admin partition

When you click the name of a cluster, the management plane displays additional details about each cluster peering connection. The following information about individual connections is available:

- Name of each peer with an active connection

- Names of cluster and admin partition for each peer

- Status of each cluster peering connection

- Heartbeat, or the time elapsed since the management plane detected a connection and updated the status

- Number of services the cluster imported from the peer

- Number of services exported from the cluster to the peer

To appear in the list of cluster peering connections, you must create the cluster peering connection using the management plane. Externally created cluster peering connections can interact with clusters that have connections created through the management plane, but they do not appear in the cluster peering view.

For more information about using the management plane to create cluster peering connections, refer to create cluster peering connections in the HCP documentation. For more general information about cluster peering, refer to cluster peering overview in the Consul documentation.

Connection status

HCP displays one of the following statuses to indicate the current state of the cluster peering connection:

Connecting

The Connecting status appears after you create a cluster peering connection. It indicates that HCP is attempting to exchange peering tokens to establish connectivity between the clusters.

If the status does not change automatically after 5 to 10 minutes, it indicates an issue in the setup process. Check each of the following:

- The cluster names and admin partitions you selected are the intended peers.

- The clusters each belong to a cluster tier that supports network connectivity with the other's region and cloud provider.

- The self-managed clusters were already linked to your HCP account.

After you determine the issue, delete the connection and attempt to establish a new one.

Active

The Active status indicates that the peering tokens were exchanged and the cluster peering connection passed a health check. When a connection's status is Active, you can export services to make them available to peers with active cluster peering connections.

Failing

The Failing status indicates that the cluster peering connection failed its most recent health check and services are not available to peers. This status can appear if you remove a cluster from your network without deleting its cluster peering connection.

Deleting

The Deleting status indicates that the cluster peering connection was deleted from the peer. When you delete a connection from one of the clusters, its peering tokens and imported services are also deleted.

If ending the cluster peering connection was intentional, click More (three dots) and then Delete connection to remove it from the list.

If ending the cluster peering connection was not intentional, delete the connection to remove it from the list. Then, click Create cluster peering connection to restart the process for establishing a cluster peering connection.

Benefits

The management plane service can improve your experience with HCP Consul in the following ways:

- Centralized operations: Reduces operational overhead by enabling operators and SREs to visualize and monitor the health of multiple Consul server clusters at once.

- Unified service catalog: Collects location and health information for your clusters' service instances in a single aggregated service catalog. Search for a service by name and then find clusters where service instances are deployed.

- Secure Consul configuration: The management plane service helps you deploy Consul clusters with TLS, gossip encryption, and ACL tokens enabled by default.

- Secure and easy UI access: Eliminates the need to set up additional load balancers to your Consul cluster and is especially useful for Consul servers running in air-gapped environments with highly restrictive network controls.

- Simplified cluster peering workflow: Eliminates the need to access individual Consul clusters for cluster peering setup. This feature is in beta release.

Get started

To get started with the HCP Consul management plane, sign in to the HCP portal. Select Consul or View Consul to access your organization's management plane.

Prerequisites

To use the management plane service, your Consul clusters must run Consul v1.14.3 or later.

Bootstrapping a self-managed cluster to HCP Consul also requires the latest version of consul-k8s. To learn how to install the latest version, refer to the Consul on Kubernetes documentation.

Deploy HashiCorp-managed clusters

When you use HCP Consul to create HashiCorp-managed clusters, they automatically connect to the management plane service. You can create HashiCorp-managed clusters with Terraform, or you can manually create them through the UI workflow. Refer to Clusters for more information.

For step-by-step instructions on using HCP Consul to deploy clusters, refer to the deploy HCP Consul tutorial.

Link self-managed clusters to HCP

Linking local clusters that you manage to HCP enables you to use the management plane service to access and monitor them. In the current release, the HCP Consul management plane only supports linking self-managed Consul clusters that were originally deployed on Kubernetes by the management plane. Linking to existing Kubernetes deployments is not supported.

To link your self-managed cluster with HCP:

From the HCP Consul overview, click Deploy Consul.

Select Self-managed Consul and click Get Started.

Enter a name for your cluster and click Continue. This name must have 3 to 36 characters and be unique to your organization. It may include numbers, hyphens, and lowercase letters, but it must start with a letter and end with a letter or a number.

HCP generates code with credentials to authenticate and connect your Kubernetes cluster with HCP Consul. These credentials include TLS, gossip encryption, and a bootstrap token. HCP stores these secrets in a HashiCorp-managed Vault. The TTL on the TLS certificate is one year.

Copy the code and run it in the CLI. Consul on Kubernetes creates a consul namespace and stores your credentials as Kubernetes secrets under this namespace. The CLI then uses these secrets to install Consul in the same namespace.

After running the code provided by HCP, click I've done this and then wait for your cluster to connect to HCP Consul.

Do not modify the management token generated by HCP. In the event of a disaster, a modified management token may prevent recovery.
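The cluster naming rules in the steps above can be checked locally before you enter a name. The following sketch encodes those rules as a regular expression. The function name is hypothetical, and the check cannot confirm that the name is unique to your organization.

```python
import re

# One lowercase letter, then 1 to 34 lowercase letters, digits, or hyphens,
# then a final lowercase letter or digit: 3 to 36 characters in total.
CLUSTER_NAME = re.compile(r"[a-z][a-z0-9-]{1,34}[a-z0-9]")


def is_valid_cluster_name(name: str) -> bool:
    """Check the length, character set, and start and end rules for a cluster name."""
    return CLUSTER_NAME.fullmatch(name) is not None


for candidate in ("prod-east-1", "ab", "1cluster", "cluster-", "Cluster"):
    print(candidate, is_valid_cluster_name(candidate))
```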

To uninstall self-managed clusters that you bootstrap with HCP Consul, run the following command:

Troubleshooting

You may encounter the following error when attempting to bootstrap a cluster in EKS using the code that HCP Consul provides:

This error occurs when using outdated versions of the AWS CLI and IAM authenticator. Upgrade both to the latest version, and then run the code provided by HCP to complete the bootstrap process.

HashiCups demo app

By default, the code you copy from HCP includes a -demo flag. This flag deploys the HashiCups application so that you can view management plane features in action immediately. If you prefer not to install the sample app, remove the -demo flag before you run the command.

To access the HashiCups app, run the following command to make it accessible in a browser at http://localhost:8080:

To remove the demo app after installation, run the following CLI command:

Update the bootstrapped self-managed clusters

You can update your self-managed clusters' config using the following steps. This is helpful if you want to update the Consul image version or enable features like cluster peering.

Retrieve the configuration used for initial bootstrapping.

Copy the config into a YAML file, then update it to your desired configuration. The following is an example of updating the Consul version to 1.14.3. Refer to the consul-k8s Helm chart reference and Helm chart examples for more information.

Run the consul-k8s CLI upgrade command.